A few thoughts on R packages

December 3, 2018

R package development GitHub CRAN rOpenSci

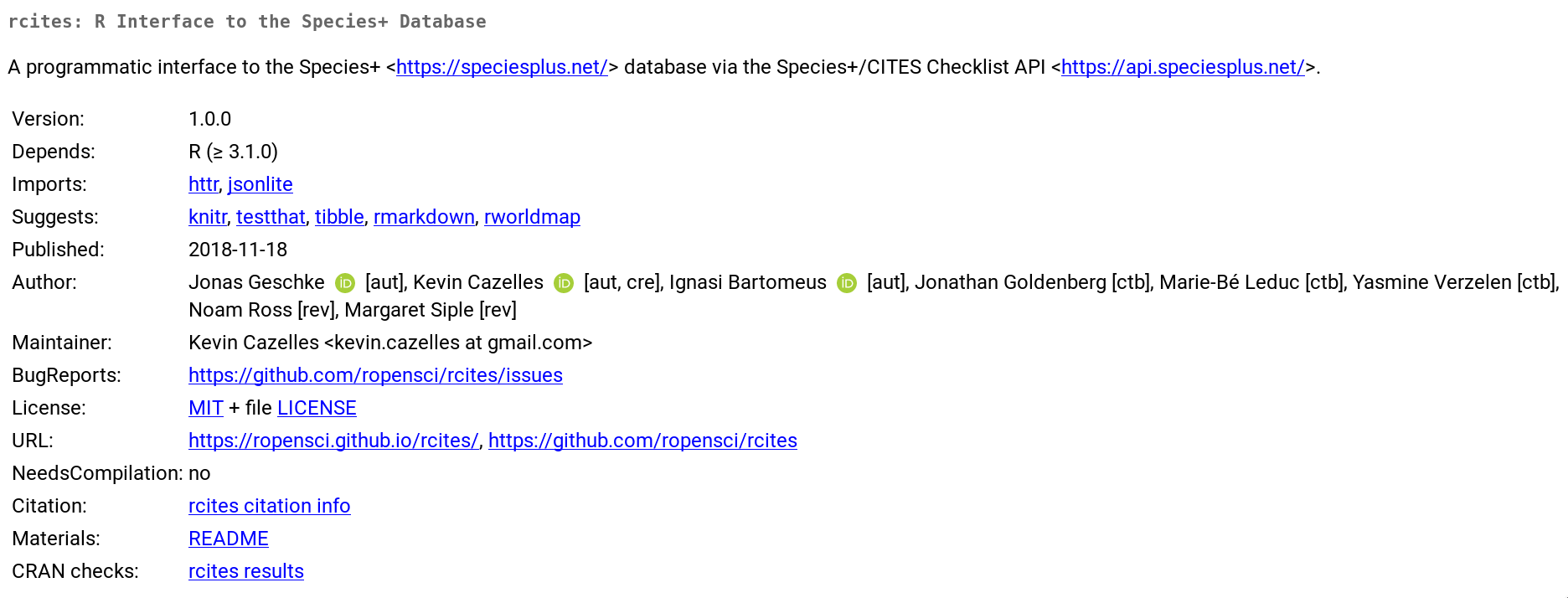

Jonas Geschke, Ignasi Bartomeus and I have recently released rcites v1.0.0 on CRAN, an R client for the Species+ API, after it was reviewed by rOpenSci. In this post, I would like to share this recent experience as well as several valuable resources.

What is an R package?

In the introduction of his book R packages, Hadley Wickham wrote:

A package bundles together code, data, documentation, and tests, and is easy to share with others.

I like the way the famous R developer presents a package: it is straightforward and crystal clear. Interestingly enough, it describes what a package does, not what a package is. Defining an R package is actually harder than I thought! The first time I tried to characterize an R package, I tried to answer a couple of questions and found the answers quite surprising:

- Are R packages extensions available on CRAN or Bioconductor? Not necessarily!

The answer sounds obvious nowadays with more than 40K R packages on GitHub, but 10 years ago it wasn’t. That said, we should remember that an R package that only exists on a single computer is still an R package.

- Should a package have a minimum number of functions? No

Even among popular packages, the number of functions is not a criterion. For instance, vegan exports 192 functions (as of version 2.5.2) while png only includes two functions.

- Should a package be designed to perform a specific analysis? No

Two comments here. First, R packages do many things: they can turn R into a powerful Geographic Information System or make your R session crash every time you load them (see trump)! Packages such as ape try to be as exhaustive as possible for a certain type of analysis while others, such as Hmisc, are sets of miscellaneous functions. Second, it sometimes makes more sense for development purposes to split the full pipeline into several packages, so that more than one package is required for certain analyses.

So what is an R package? Well, my own definition would be something along this line:

A set of files that passes the command R CMD INSTALL.

From this definition, one should understand that nothing guarantees that an R package performs any analysis correctly. Rather, an R package is an organized set of files that passes a set of tests, a lot of tests!

Why write an R package?

This is another crucial question. In his introduction, Hadley Wickham emphasized that R packages are a standard way to organize your project: it saves you time, gives you an opportunity to acquire good practices when writing functions and makes the sharing of your code easy.

Once you get used to creating and handling R packages, it becomes natural to use the standard file organisation to structure your project. With this habit, the question “where is this file?” vanishes and you stop using setwd(). Note that some authors propose similar structures for R projects, e.g. this post on nicercode, but you’ll need to tweak them a little bit if you want to test your code with standard tools.

I would like to point out that one of the main reasons for creating a package is efficiency, not necessarily submission to CRAN. To me, this is extremely important. It means that you do not need a special reason to write a package: if you work regularly with R, you can write packages, and whenever you decide to turn the set of functions for a particular project into an actual package, the step to do so will be small. Of course, sharing your package is an important question, but I think it is a different one: writing a package does not imply that you will share it.

Why share your R package?

There are different answers to this question and, depending on the answer, you may consider different options to share your code. I do not intend to be exhaustive here; rather, I provide three examples I am familiar with.

1- My personal helper functions

When I keep repeating the same lines of code in a project, I write a function. Sometimes, the function can actually be used in different projects. I used to write this kind of function in a dedicated .R file that was loaded during my custom startup process (see ?Startup in R). Three years ago, I decided to make a package out of these functions. There were three main reasons for doing so. First, I was still learning how to write packages, so this was another opportunity to practice. Second, I wanted to document these functions. Last but not least, I thought these functions might be useful to other people, so writing an R package was an efficient way to share them. The package is now on GitHub: inSilecoMisc. Everyone can use it, borrow one or more functions for their own project, report issues, etc. Even though the package is exhaustively tested, inSilecoMisc remains a collection of sub-optimal functions which, in my opinion, does not deserve to be published on CRAN. GitHub and the additional web services I use cover all my needs!

2- The code to reproduce a paper

Reproducible science matters to me. When I publish a paper, I cannot see any reason not to share the analysis pipeline with the rest of the scientific community: it demands a little more of my time but saves them a huge amount of time. To do so, I now create a small package that contains the analysis. The first time I did so was for a paper written with a couple of colleagues in which we compared media coverage of climate change and biodiversity. Unfortunately, we were not able to make the data gathering reproducible (believe me, we tried; the only solution was to work with a private API, which would have made the code not reproducible for the majority of researchers). We nevertheless turned the data collected into .Rdata files and stored the set of functions in a GitHub repository: burningHouse. This ensures the interoperability of the package, shows other researchers how we did the analysis and lets them report issues with the code. Moreover, Zenodo makes it simple to assign a DOI to the repository so that people can reference this work if needed. Note that it is becoming quite common for researchers to share their code that way, see for instance this repository associated with this nice paper about girl power in hyena clans.

3- An R client for a web API

A year ago, I was at a hackathon in Ghent (my first hackathon) and I sat at the same table as Jonas Geschke and Ignasi Bartomeus (among other colleagues). We worked intensively on a prototype of a package listed in the wishlist of rOpenSci: an R client for the CITES API. After we completed the first version, we submitted it to the review process of rOpenSci, and rcites is now part of rOpenSci (more about this story here). So here is a tangible example where the package is worth (I think) a place on CRAN and even in a dedicated organization (rOpenSci) on GitHub. The package is general, has a specific goal, helps people retrieve data to do science and helps them do it in a reproducible way. So it should be broadly shared. Plus, making the package part of an organization such as rOpenSci means that it will likely keep being maintained properly.

How to proceed?

Below, I give a brief overview of how we proceeded for rcites. Note that if you are interested in writing an R package, you must read more about what I am quickly mentioning below, so scroll down to the end of this post where I listed a couple of very helpful resources. After 3 years of experience with packages, I do not frequently have to look at documentation, but when I do, I always find the answers in R packages or Advanced R both by Hadley Wickham or in the exhaustive and technical documentation Writing R extensions available on the CRAN.

Local development

I personally use the set of packages developed by Hadley Wickham and colleagues. His book R packages explains how to write your package with them. Installing these packages and starting to work on a package is as simple as the command line below:

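As a sketch of that installation step, and assuming the four packages described just below are the ones meant, a single call to install.packages() is enough:

```r
# Install the development toolchain from CRAN (needs an internet connection).
# Assumption: these are the four packages the post goes on to describe.
install.packages(c("devtools", "roxygen2", "testthat", "knitr"))
```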

Briefly:

- roxygen2: document the functions;

- testthat: test your code;

- knitr: build the vignette;

- devtools: rule them all! (version 2.0 has recently been released).

In a nutshell, the workflow with this setup looks like this:

1-"fix/write code" => 2-load_all() => 3-document() => 4-test() => 5-check()
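In code, one pass through steps 2 to 5 might look like the sketch below. The wrapper name dev_cycle() is hypothetical and only there to group the four devtools calls; in practice I would typically run them one by one from the package root.

```r
# Hypothetical wrapper around one iteration of the development loop.
# `pkg` is the path to the package root (the directory holding DESCRIPTION).
dev_cycle <- function(pkg = ".") {
  devtools::load_all(pkg)   # step 2: load the current code into the session
  devtools::document(pkg)   # step 3: regenerate man/ and NAMESPACE with roxygen2
  devtools::test(pkg)       # step 4: run the testthat unit tests
  devtools::check(pkg)      # step 5: build the package and run R CMD check
}
```

If any call fails, the error stops the sequence, which matches the idea of going back to step 1 before moving on.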

Basically, you follow these steps for every feature you are adding to your package and go back to step 1- if one of the subsequent steps fails. The package usethis is also worth mentioning as it automates many steps of the process. Moreover, package.skeleton() creates a package skeleton for you and Dirk Eddelbuettel has extended the function for packages that are linked to Rcpp, see Rcpp.package.skeleton().
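To give an idea of what package.skeleton() produces, here is a minimal sketch that only relies on base R. The function add_one() and the package name demoPkg are hypothetical, and the skeleton is written to a temporary directory.

```r
# Hypothetical example function to ship in the package.
env <- new.env()
env$add_one <- function(x) x + 1

# Write the skeleton into a temporary directory so nothing is left behind.
pkg_parent <- tempfile("skeleton-")
dir.create(pkg_parent)
utils::package.skeleton(
  name = "demoPkg",        # hypothetical package name
  list = "add_one",        # objects to include, looked up in `environment`
  environment = env,
  path = pkg_parent
)

# Inspect what was generated: DESCRIPTION, NAMESPACE, R/ and man/ files.
created <- list.files(file.path(pkg_parent, "demoPkg"), recursive = TRUE)
print(created)
```

The generated help files are templates that still need to be edited by hand before the package passes the checks.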

I would like to conclude this part by pointing out that using the set of packages by Hadley Wickham and colleagues isn’t mandatory, but remember, their developers have cleverly automated many steps for writing R packages, so why not use the fruit of their labor?

Version control and Continuous Integration

Web-based Git repository managers (GitHub, GitLab, Bitbucket, etc.) are very powerful tools to develop your packages, especially to work with collaborators, get feedback and add web services to improve your workflow. First, Git itself is a fantastic tool (it is beyond the scope of this post; I recommend reading Pro Git). Second, by hosting your package on GitHub, you are basically sending an open invitation to contribute to your project: “hey devs, this is the project I am working on, if you are interested, please star, clone, fork, report issues, submit pull requests, etc.”! Furthermore, once on GitHub, people can easily install your package directly from R:

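For example, assuming devtools is installed and that rcites lives in the ropensci organization on GitHub (the repository slug below is an assumption), the installation might look like this:

```r
# Install the development version of a package straight from GitHub.
# The argument follows the "owner/repository" pattern; this slug is assumed.
devtools::install_github("ropensci/rcites")
```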

Last, the number of web services you can add to your repository is tremendous (sign in with GitHub and let the OAuth 2.0 protocol do the rest). There are two kinds of services I always use for all my R packages: continuous integration (CI) and code coverage. I would like to expand a little bit on these two.

Continuous integration

I personally use Travis, which covers MacOS and Ubuntu, and AppVeyor for Windows. As continuous integration services, they allow me to check that my package can be seamlessly built on different, freshly installed systems for a specific version of R. I am very grateful to the developers who make the use of these services really easy!

Code coverage

Code coverage is the percentage of lines of code exercised by your unit tests. Unit testing plays two critical roles. First, it checks that your code does what you want it to do. Second, once your tests are written, they are extremely useful when you are fixing a bug, restructuring the code or adding new features: you immediately know whether the changes affect the behavior of other parts of your package, so unit testing keeps bugs from multiplying via side effects of the new lines of code. I personally use testthat to write my tests and codecov to show the code coverage and benefit from the neat visualizations it automatically produces (see for instance the results for rcites). I must also mention that I’ve recently tried coveralls and I like its design very much, so I may use it more in the near future! Many thanks to Jim Hester for his work on the integration of these services for R packages.
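As an illustration, here is what a small unit test written with testthat might look like. The function add_one() is hypothetical; in a real package it would live in R/ and the test in tests/testthat/.

```r
library(testthat)

# Hypothetical function under test.
add_one <- function(x) x + 1

test_that("add_one() increments its input", {
  # Expected behavior on valid input.
  expect_equal(add_one(1), 2)
  expect_equal(add_one(c(0, 10)), c(1, 11))
  # Adding 1 to a character string is an error in R, and the test documents it.
  expect_error(add_one("a"))
})
```

To my knowledge, the covr package can then compute the coverage locally with covr::package_coverage(), before any web service is involved.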

License

Even though I am no expert on this topic, I can tell that choosing your license matters: it specifies what people can do with your code. I recommend that you visit the website “ChooseALicense” and, if this question is critical to your project, you had better ask somebody who is knowledgeable about Intellectual Property (IP).

Release on GitHub and on the CRAN

This topic is also well covered in Hadley’s book, so I suggest you read this chapter.

A release is an achievement: you have reached a milestone and the package works great, so you are basically spreading the news “I/we’ve reached a milestone guys, here it is!”. Doing a release is as simple as changing the version number in the DESCRIPTION file. That said, there are ways of making it more official and more useful.

First on GitHub, you can create releases. After editing the version number in DESCRIPTION, you can push and create a release and even add a DOI with the version via Zenodo. You can also submit your package to the CRAN or Bioconductor which demands a little more work.

Either way, at this stage you must choose a version number. Easy peasy? Yes, but this choice does matter. It is all about making the numbers as meaningful as possible, so that they provide useful landmarks as you keep on developing your package. Semantic Versioning does a great job at explaining this, check this website out! I also suggest that you spend some time thinking about how useful it is to make the version number converge to pi.
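A small sketch in base R shows why version numbers are better handled as structured values than as plain text. The two version numbers below are made up for the example.

```r
# Two hypothetical releases of the same package.
old_release <- package_version("1.9.0")
new_release <- package_version("1.10.0")

# Compared field by field (major, minor, patch), 1.10.0 is the newer release.
new_release > old_release   # TRUE

# Compared as plain strings, the order is wrong because "1" sorts before "9".
"1.10.0" > "1.9.0"          # FALSE
```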

I have no experience submitting a package to Bioconductor, so I can only speak about CRAN. I’ll be brief as you can read how to do so in Hadley’s book:

- You must test your package on the main platforms (MacOS, Windows, Linux) and report warnings/notes, if any. Travis and AppVeyor, which I introduced above, are very useful for this: if they are part of your workflow, this step is basically done. I nevertheless recommend using win-builder (one command line, devtools::build_win(), which devtools 2.0 replaces with the check_win_*() functions) and having a look at the r-hub builder.

- You must write the results in cran-comments.md, where you must mention whether it is your first submission and whether your package has downstream dependencies.

Below is the most recent one for rcites:

|

|

Once done, you are ready to submit, so thanks to devtools, just one command line:

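A sketch of that submission step, assuming the command meant here is devtools::release(), which asks a series of questions before uploading the package (devtools::submit_cran() skips the questions):

```r
# Run from the package root, in an interactive session only:
# release() goes through a checklist and asks for confirmation before
# the package is actually submitted to CRAN.
if (interactive()) {
  devtools::release()
}
```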

After this, you’ll receive an email (at the address you wrote in the maintainer field of DESCRIPTION) to confirm the submission. You may receive emails from the people who manage CRAN because of issues or potential improvements. So far, they have given me one good piece of advice: using \donttest{} instead of commenting out examples that cannot be tested on CRAN.
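For instance, with roxygen2 the advice translates into something like the sketch below. The function fetch_record() is hypothetical and its body is a placeholder; the point is only the \donttest{} block, which keeps the example in the help page while a default R CMD check does not run it.

```r
#' Fetch a record from a remote service (hypothetical function)
#'
#' @param id Identifier of the record.
#' @return The identifier, invisibly (placeholder behavior).
#' @examples
#' \donttest{
#' # Needs network access and an API token, so it is not run by default.
#' fetch_record("4521")
#' }
#' @export
fetch_record <- function(id) {
  # Placeholder: a real implementation would query the web API here.
  message("Querying record ", id)
  invisible(id)
}
```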

Why submit your package to a peer review process?

Let’s assume your package is already on GitHub, the build passes on both Travis and AppVeyor and the code coverage is above 90%. Is it enough? Does that mean you wrote a good package? What is a good package? To me, a good package does not need to be among the most downloaded ones but rather one that is well coded. There is a set of criteria that can actually be objectively evaluated:

- Good practice: is your package compliant with good practices? Given the number of ways R offers to do the same thing, e.g. <- or = to assign a value to a variable (which puzzles some people), it is recommended to follow good practice. goodpractice checks whether your package does so. For instance, goodpractice::gp() checks that you are using <- rather than =, that your lines of code do not exceed 80 characters, that you are using TRUE and FALSE instead of T and F, etc.

- Readability: is your code well-commented and easy to understand? This determines how easy it would be for new contributors to understand your code and thus how easy it would be for them to contribute. Note that for efficiency purposes, some steps may sometimes be hard to understand; in such cases, you must comment on how you did it and why. This is also relevant for the future you.

- Dependencies: there is nothing wrong with depending on other packages. However, being parsimonious and using adequate packages matters (the Occam’s razor version for packages). The first release of rcites imported data.table. During the review of the package, Noam Ross pointed out that data.table is mostly used for efficiency purposes and that, in the context of rcites, it was an extra dependency for no real benefit, which is true, so we changed this.

- Code redundancy: is your code a bunch of copy-paste or is it well-designed so that it avoids redundancy and makes debugging efficient? This may sound technical, but well-structured code is also code that is easy to debug and to develop further.

- Efficiency: is your code efficient? There are many tips given by Colin Gillespie and Robin Lovelace in Efficient R programming, have a look!

- User experience: ever wondered why Hadley’s packages are among the most popular ones? Well, he cares a lot about the users. I am sure he thinks a lot about how people will use a package and how to make it as intuitive as possible. Moreover, he exhaustively documents his work. This is a critical part. Making your package user-friendly is about its design and its documentation. Without good documentation, you’ll miss your shot. Build vignettes! Explain carefully how your package works, build a website! You can no longer say that this is hard because pkgdown makes this really easy!
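Two of these criteria can be partly automated. The sketch below groups the corresponding calls in a hypothetical helper, review_prep(), assuming goodpractice and pkgdown are installed and that `pkg` points to the package root.

```r
# Hypothetical helper: run the automated advice and rebuild the website.
review_prep <- function(pkg = ".") {
  # goodpractice::gp() runs a battery of checks and returns a report.
  report <- goodpractice::gp(pkg)
  print(report)
  # pkgdown::build_site() turns the documentation and vignettes into a website.
  pkgdown::build_site(pkg)
  invisible(report)
}
```

Readability, design and user experience still require human eyes, which is the point of the next paragraph.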

To evaluate all these aspects, you (still) need human reviewers! That’s why I value initiatives such as rOpenSci so much: their members review packages, advertise the packages they’ve reviewed, write tutorials and blog about what they are doing. Our experience was awesome: we had very positive feedback and very clever suggestions that made rcites a better package. I would love to say more about it but guess what, the review process is 100% transparent, so check out what really happened. You may wonder whether your package is in the scope of rOpenSci and how to submit it. Well, they are completing a book to answer all these questions, check it out! If your package is not in the scope of rOpenSci, you have other options such as The Journal of Open Source Software (JOSS) or the Journal of Statistical Software. Note that rOpenSci has a Software Review Collaboration with Methods in Ecology and Evolution and with JOSS. So in our case, it meant that our JOSS paper was accepted after the review completed by rOpenSci.

There are thousands of packages on CRAN, and I personally think that it is worth sending packages of good quality (again, quality means that the package does what it was meant to do and does it well). After this experience, I’ll always do my best to get reviews of the code before sending a package to CRAN. People sometimes wonder how to trust an R package; getting packages peer-reviewed is definitely a good solution. From now on, my personal approach with R packages will be:

- Development on GitHub (or similar);

- Review;

- Development until package accepted;

- Submit first version to CRAN;

- Advertise, blog, etc.;

- Regular life cycle: new features/ fixing bugs / new release on CRAN when ready / keep advertising.

Curated list of resources mentioned in the post

Packages

Builders

Documentation

- “Writing an R package from scratch” by Hilary Parker (that actually is the first thing I read on this topic)

- Writing R Extensions, on CRAN

- R packages by Hadley Wickham

- rOpenSci Packages: Development, Maintenance, and Peer Review

- Advanced R by Hadley Wickham

- Efficient R programming by Colin Gillespie and Robin Lovelace

- Pro Git

Journals

- R Journal

- The Journal of Open Source Software (JOSS)

- Methods in Ecology and Evolution (MEE), it partnered up with rOpenSci