Creating a monorepo of R packages with GitHub

November 23, 2023

Tags: R, GitHub, GitHub Actions, monorepo, Docker

In some situations, using a monorepo for R packages is desirable. The key to making it work is a reliable workflow that checks all your packages when needed. monoRepoR exemplifies the monorepo strategy.

Context

As an R developer who often works on open source projects, I normally create one GitHub repository for each new R package. Teams of R developers who work on several packages use organization accounts to gather packages that share a common scope. Popular accounts of this kind include:

That said, there are situations where using a monorepo for a bunch of R packages is useful. Two situations come to my mind. First, there are strong interdependencies among your packages, such as a couple of packages developed for a Shiny app. Second, you only have one repository because that’s how much your CTO trusts you!

As far as I am aware, using a monorepo is not a common practice for R packages. The fantastic r-universe initiative uses this approach: it relies on submodules to create a monorepo for your organization that includes all your R packages, and then applies a common pipeline to those packages (e.g. see https://github.com/r-universe/insileco). This is very powerful and very well designed.

In this post, I show how to set up a monorepo that includes two packages, one of which relies on the other. As a bonus 🎉, at the end of the post, I explain how to include the two packages in a Docker image.

Setting up the repository with two R packages

The first step is to create two packages within the repository. To do so, I leverage the R package usethis as follows:
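
A minimal sketch of this step, assuming the working directory is the root of the repository and that usethis is installed. Because the new packages are nested inside an existing Git repository, usethis may ask for confirmation before creating them.

```r
# Run from the root of the monorepo; requires the usethis package.
# open = FALSE keeps the current session where it is instead of
# switching to the newly created package.
usethis::create_package("testpkg01", open = FALSE)
usethis::create_package("testpkg02", open = FALSE)
```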

I have now created testpkg01 and testpkg02, which are basically folders at the root of the repository. For the sake of this example, I add one function to each package. In testpkg01 I add hello_co2():
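
For illustration, here is a minimal sketch of what such a function could look like in testpkg01/R/hello_co2.R. Only the name hello_co2() comes from the package; the body and the returned message are assumptions.

```r
#' Say hello to CO2
#'
#' @return A character string of length one.
#' @export
hello_co2 <- function() {
  # Placeholder body (assumption): return a fixed greeting.
  "Hello CO2!"
}
```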

that I call in testpkg02:
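
A sketch of the calling side, which could live in a file under testpkg02/R/. The function name hello_from_testpkg02() is hypothetical; the point is only that testpkg02 calls hello_co2() through the testpkg01 namespace.

```r
#' Relay the greeting from testpkg01 (hypothetical example function)
#'
#' @return A character string of length one.
#' @export
hello_from_testpkg02 <- function() {
  # testpkg01 must be installed for this call to succeed.
  paste("testpkg02 relays:", testpkg01::hello_co2())
}
```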

testpkg01 is therefore added to the list of dependencies in the DESCRIPTION file of testpkg02:
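
The relevant part of testpkg02/DESCRIPTION then looks roughly like this. I am assuming the dependency is declared under Imports; all other fields are left out.

```text
Package: testpkg02
Imports:
    testpkg01
```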

Checking the packages with GitHub Actions

Now for what I believe is the trickiest part of the post: to work with R packages efficiently, packages must be checked on a regular basis. A CI/CD service such as GitHub Actions does that.

As I am using a monorepo, I need a way to check all R packages with a common workflow. GitHub Actions has a feature called reusable workflows that covers this need. A reusable workflow is very similar to a regular one, but it is called from another workflow and declares the inputs it accepts. I created R-CMD-check, which takes a string input pkg-path:
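
A sketch of the trigger section of such a reusable workflow, assuming it is saved as .github/workflows/R-CMD-check.yaml. The workflow_call trigger is what makes the workflow callable from another one; marking the input as required is my assumption.

```yaml
# .github/workflows/R-CMD-check.yaml (trigger section only)
name: R-CMD-check

on:
  workflow_call:
    inputs:
      pkg-path:
        description: "Path of the package to check, relative to the repository root"
        required: true   # assumption: the caller must always pass a path
        type: string
```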

The rest of the workflow consists of steps borrowed from r-lib/actions, and I use working-directory: ${{ inputs.pkg-path }} to apply them to the right package. There is, however, a subtlety to consider: testpkg02 needs testpkg01, which means that testpkg01 must be installed before testpkg02 is checked. As the package is included in the monorepo, I simply list it as an extra local package to be installed:
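
The job could be sketched as follows. This is an illustration under assumptions rather than the exact workflow: the runner, the action versions and the precise extra-packages value are mine. The local:: reference is resolved relative to the working directory, hence the ../ prefix.

```yaml
# .github/workflows/R-CMD-check.yaml (jobs section, sketch)
jobs:
  R-CMD-check:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - uses: r-lib/actions/setup-r@v2

      # Install the dependencies of the package under check, plus the
      # sibling package testpkg01 from its local folder.
      - uses: r-lib/actions/setup-r-dependencies@v2
        with:
          working-directory: ${{ inputs.pkg-path }}
          extra-packages: any::rcmdcheck, local::../testpkg01
          needs: check

      - uses: r-lib/actions/check-r-package@v2
        with:
          working-directory: ${{ inputs.pkg-path }}
```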

Note, however, that this only works if all packages are at the same level.

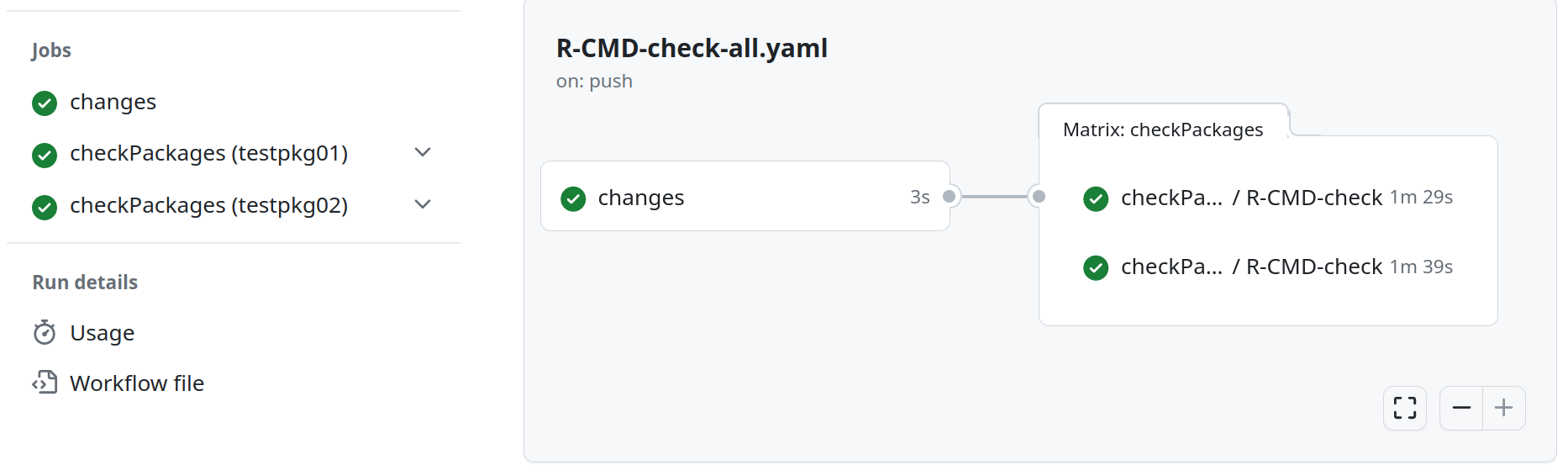

I now create the main workflow that will call the reusable workflow. In this workflow, only the packages with changes should be checked. For instance, if a pull request contains only changes in testpkg02, testpkg01 does not need to be checked. That is possible with path filtering, and in my workflow I use dorny/paths-filter. The main workflow R_CMD-check-all includes two jobs: changes and checkPackages. changes leverages dorny/paths-filter to return a list of package names based on what has changed.
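
A sketch of the changes job, assuming a step id of filter and current major versions of the actions. The changes output of dorny/paths-filter is a JSON array containing the names of the filters that matched at least one changed file.

```yaml
# Main workflow (first job, sketch)
jobs:
  changes:
    runs-on: ubuntu-latest
    outputs:
      # JSON array such as ["testpkg01","testpkg02"]
      packages: ${{ steps.filter.outputs.changes }}
    steps:
      - uses: actions/checkout@v4
      - uses: dorny/paths-filter@v3
        id: filter
        with:
          filters: |
            testpkg01:
              - 'testpkg01/**'
            testpkg02:
              - 'testpkg02/**'
              - 'testpkg01/R/**'
```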

If any file in the folder testpkg01 ("testpkg01/**") changes, then testpkg01 will be in the output list. Similarly, if any file in testpkg02 changes, or if any file in testpkg01/R changes, then testpkg02 will be in the output list. The latter rule is super useful because it allows us to check that testpkg02 is not affected by the changes in testpkg01. The list of outputs needs.changes.outputs.packages is then passed to the next job, checkPackages, which won’t be triggered until changes has completed.
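
The second job could be sketched like this, assuming the reusable workflow lives at .github/workflows/R-CMD-check.yaml and that the filter names double as package paths. The if condition skips the job when the list is empty, and max-parallel: 1 runs the matrix entries one at a time.

```yaml
# Main workflow (second job, sketch; sits under the same jobs: key)
jobs:
  checkPackages:
    needs: changes
    # Skip entirely when no package was touched.
    if: ${{ needs.changes.outputs.packages != '[]' }}
    strategy:
      fail-fast: false      # assumption: keep checking the other packages
      max-parallel: 1
      matrix:
        package: ${{ fromJSON(needs.changes.outputs.packages) }}
    uses: ./.github/workflows/R-CMD-check.yaml
    with:
      pkg-path: ${{ matrix.package }}
```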

I further add a check to prevent any job from running if the list is empty, and I run only one job at a time (in this case there is no specific reason to do so). checkPackages will then convert needs.changes.outputs.packages into a matrix and call R-CMD-check.yaml for each package in the list. The workflow is now ready 🎉!

Adding the two packages in a Docker container

This is the bonus part of the post, where I add the two packages to a Docker image that I push to DockerHub using GitHub Actions. This is not a post about Docker. Still, in a nutshell, Docker is an incredible technology to create a virtual container for your application that can be run on any OS. This makes your application easy to share, easy to deploy and easy to scale (with the help of other technologies).

To create my Docker image with the two packages, I create a very simple Dockerfile.
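
A sketch of such a Dockerfile. The base image tag, the target directory and the script name install.R are assumptions; the tarballs are expected to sit next to the Dockerfile in the build context.

```dockerfile
# Base image from the rocker project (exact image and tag are assumptions)
FROM rocker/r-ver:4.3.2

# Bring the system packages up to date
RUN apt-get update && apt-get upgrade -y

# Copy the built packages and the installation script into the image
COPY testpkg01_*.tar.gz testpkg02_*.tar.gz install.R /tmp/pkgs/

# Install both packages from the copied tarballs
WORKDIR /tmp/pkgs
RUN Rscript install.R
```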

I first pull the base image from the fantastic rocker repository. Then I ensure that apt packages are up to date. Next, I copy the *.tar.gz files (created for both packages with R CMD build) as well as a minimal script. To complete the image build, I run the script to install the two packages.
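
A minimal sketch of such an installation script, here called install.R (a hypothetical name, as are the two helper functions). It installs the tarballs in dependency order, testpkg01 first, and assumes the script runs in the directory that contains them.

```r
# install.R: install the locally built tarballs in dependency order.

# Return the single tarball named <pkg>_<version>.tar.gz found in `dir`.
find_tarball <- function(pkg, dir = ".") {
  hits <- list.files(
    dir,
    pattern = paste0("^", pkg, "_.*\\.tar\\.gz$"),
    full.names = TRUE
  )
  if (length(hits) != 1L) {
    stop("Expected exactly one tarball for ", pkg, ", found ", length(hits))
  }
  hits
}

# Install each package from its tarball, in the order given.
install_tarballs <- function(pkgs, dir = ".") {
  for (pkg in pkgs) {
    install.packages(find_tarball(pkg, dir), repos = NULL, type = "source")
  }
}

# Only run the installation when tarballs sit next to the script,
# as they do during the image build.
if (length(list.files(pattern = "\\.tar\\.gz$")) > 0) {
  # testpkg01 goes first because testpkg02 depends on it.
  install_tarballs(c("testpkg01", "testpkg02"))
}
```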

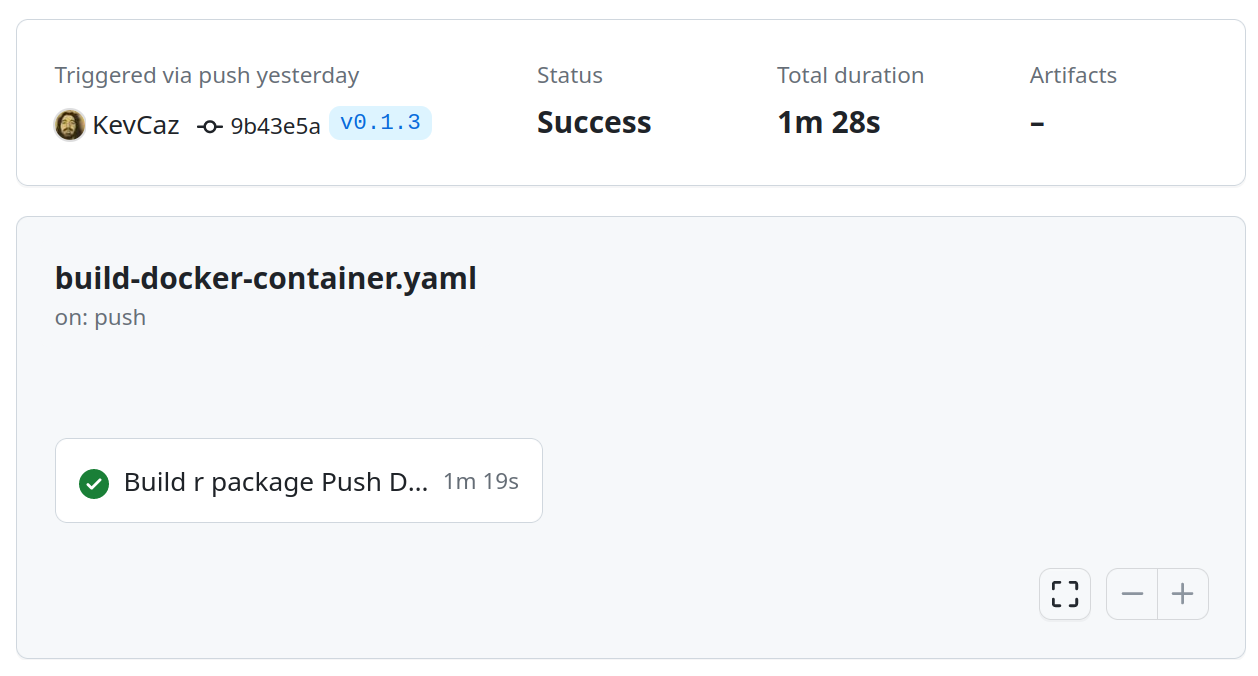

I design a last workflow, build-and-release, based on the one available in the documentation page ‘Publishing Docker images’. It generates the *.tar.gz files, builds the Docker image and pushes it to my DockerHub repo. This workflow is only triggered when a tag starting with “v” is pushed. For me, this means every commit that marks a new version, pretty neat!
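
A sketch of what such a workflow could look like. The secret names, the image name my-user/my-image and the action versions are all assumptions; context: . makes the build use the checked-out folder, so the freshly built tarballs are available to the Dockerfile.

```yaml
# .github/workflows/build-and-release.yaml (sketch)
name: build-and-release

on:
  push:
    tags:
      - "v*"

jobs:
  docker:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - uses: r-lib/actions/setup-r@v2

      # Creates testpkg01_<version>.tar.gz and testpkg02_<version>.tar.gz
      - name: Build package tarballs
        run: |
          R CMD build testpkg01
          R CMD build testpkg02

      - uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}   # hypothetical secret name
          password: ${{ secrets.DOCKER_PASSWORD }}   # hypothetical secret name

      - uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: my-user/my-image:${{ github.ref_name }}   # hypothetical image name
```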